A Beginner's Guide to Vector Embeddings

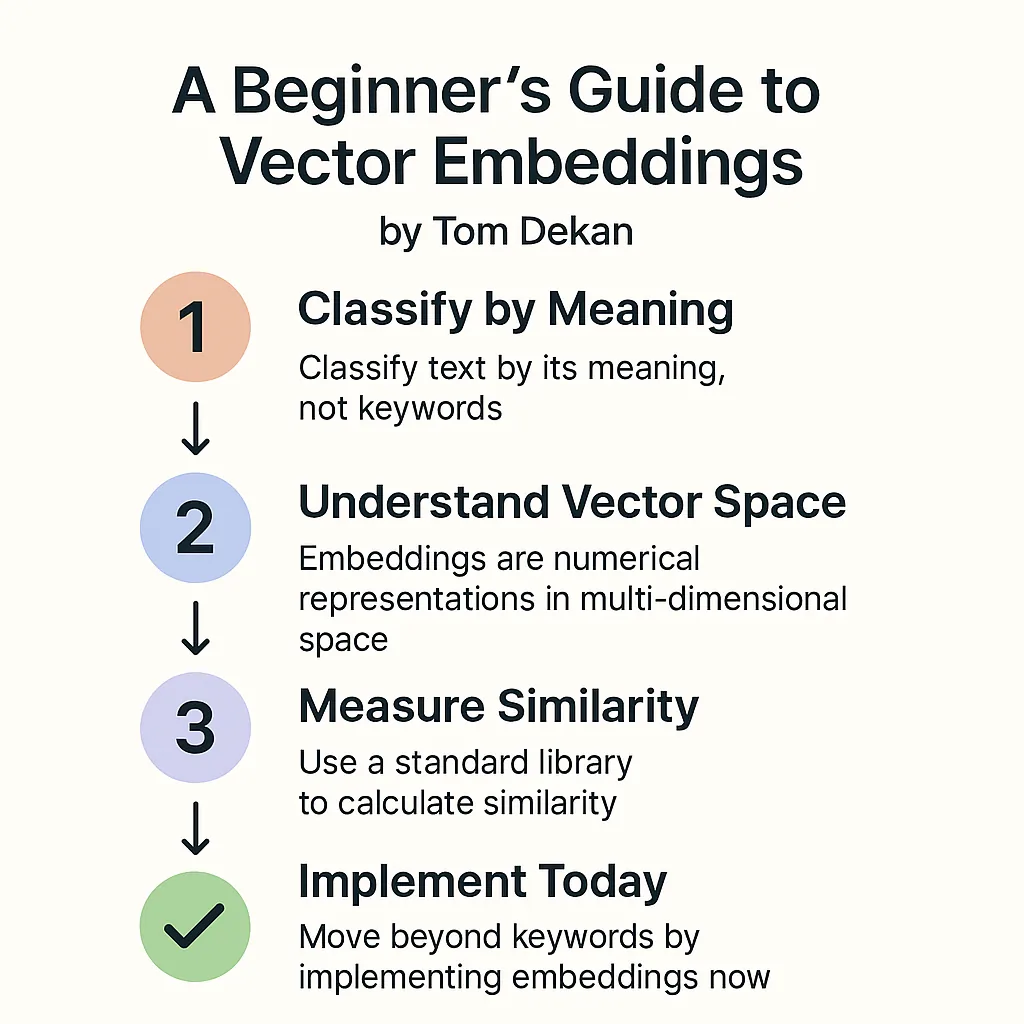

This post provides a simple, practical guide on how to create vector embeddings. I'll cover generating text embeddings and using them to compare the meaning of text by calculating a standardized similarity score.

(Modern tech in 2026 means that calculating similarity with embeddings is really easy and cheap to do with common providers).

I'll illustrate my points based on solving a problem at our startup, Stackfix. See below for copy-and-pastable code or a github repo containing the ready-to-run code.

The Problem: How to classify text by meaning

I wanted a standardized score showing how closely any paragraph fits within one of our 7 rating categories (which we use for testing software products). This required understanding the meaning of the paragraph, not just keywords.

Once I knew what category the text fits in, I could process the text further in a more fine-grained way. This guide shows how creating vector embeddings solved this.

I'll show you how to use vector embeddings simply to compare text, using:

- embeddings from an external API

- calculating similarity with cosine similarity (a standard function)

Other solutions to detect similarity (which work poorly for my task)

My aim is always to start with the simplest solution, working upwards in complexity if the simpler solutions don't fit.

Rejected approach: Text matching

The simplest approach. But simply matching text doesn't solve my problem because it ignores meaning and focuses on exact matches. There'll be a lot of variation in the text that I want to classify. Different wording or synonyms would be missed entirely. So, this is unsuitable.

Rejected approach: Word Frequency and Probabilistic Models

Counting word occurrences per category and probabilities would be more flexible but still limited.

Achieving sufficient accuracy could require complex probabilistic models (e.g., determining the expected word proportions for each category). This might still fail to grasp subtle nuances in meaning. This would likely be a complex and brittle approach. Unsuitable.

Rejected approach: Using an LLM directly

This is a great solution for many problems, but doesn't work well here due to a lack of precision.

LLMs understand meaning intuitively but don't quantify similarity in a standardized way.

This is similar to how humans detect similarity. When asked to score the similarity between chunks of text, neither the human nor the LLM can give a standardized score.

If someone asks you whether the word 'king' is more similar to 'man' than the word 'queen', you would know the answer. But you wouldn't be able to give a standardized score for that similarity. Neither would an LLM. So, this approach alone is unsuitable for my problem.

What Are Vector Embeddings?

Vector embeddings are numerical representations of text (or other data types like images) in a multi-dimensional space. Embeddings capture the semantic meaning of words, allowing machines to understand similarities between concepts.

Creating Vector Embeddings Step-by-Step

Here's the core process for how to create vector embeddings and use them:

-

Generate Embedding for Text A (e.g., Your Categories)

Convert each category description into an embedding vector using an embeddings provider (e.g., OpenAI, Cohere, Voyage AI). This vector represents the text's location in the provider's high-dimensional meaning space.

-

Generate Embedding for Text B (e.g., Your Input Paragraph)

Send the text you want to classify (e.g., "Attio is enjoyable for the user due to its smooth interface...") to the same embeddings provider to get its vector representation.

-

Calculate Cosine Similarity

Compute the cosine similarity between the vector for Text A and the vector for Text B. This mathematical function measures the angle between the vectors, giving a score (typically -1 to 1) indicating semantic closeness. A score of 1 means they are extremely similar in meaning.

This process allows us to compare our input paragraph against each category description, getting a numerical score for how well it fits each one.

Here's the TypeScript code implementing this approach:

import dotenv from 'dotenv'

import { OpenAI } from 'openai'

// Load environment variables (like API keys) from a .env file

dotenv.config()

// Initialize the OpenAI client with the API key from environment variables

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY, // Ensure your OPENAI_API_KEY is set in .env

})

// Define the categories we want to classify text into, with descriptions

const categories: Record<string, string> = {

ease_of_use:

`How intuitive and user-friendly the product is.

Includes navigation flow, clarity of UI elements, and minimal learning curve.`,

functionality:

`How well the product performs its core functions.

Includes feature completeness, reliability, and performance.`,

ease_of_setup:

`How simple it is to install, configure, and get started with the product.

Includes documentation quality and initial onboarding.`,

look_and_feel:

`The visual appeal and aesthetic quality of the interface.

Includes design consistency, visual hierarchy, and emotional response.`,

}

/**

* Generates a vector embedding for the given text using the OpenAI API.

* @param text The input string to embed.

* @returns A promise that resolves to an array of numbers representing the embedding.

*/

async function getEmbedding(text: string): Promise<number[]> {

const response = await openai.embeddings.create({

model: 'text-embedding-3-small', // Specify the desired embedding model

input: text.replace(/\n/g, ' '), // API best practice: replace newlines with spaces

})

if (

!response.data ||

response.data.length === 0 ||

!response.data[0].embedding

) {

throw new Error('Failed to retrieve embeddings from OpenAI API.')

}

return response.data[0].embedding

}

/**

* Calculates the dot product of two vectors (must be same length).

* Helper function for cosine similarity.

* @param vecA First vector.

* @param vecB Second vector.

* @returns The dot product.

*/

function dotProduct(vecA: number[], vecB: number[]): number {

if (vecA.length !== vecB.length) {

throw new Error('Vectors must have the same length for dot product')

}

let product = 0

for (let i = 0; i < vecA.length; i++) {

product += vecA[i] * vecB[i]

}

return product

}

/**

* Calculates the magnitude (or Euclidean norm) of a vector.

* Helper function for cosine similarity.

* @param vec The input vector.

* @returns The magnitude of the vector.

*/

function magnitude(vec: number[]): number {

let sumOfSquares = 0

for (let i = 0; i < vec.length; i++) {

sumOfSquares += vec[i] * vec[i]

}

return Math.sqrt(sumOfSquares)

}

/**

* Normalizes a vector (scales it to have a magnitude of 1).

* Helper function for cosine similarity.

* @param vec The input vector.

* @returns The normalized vector.

*/

function normalize(vec: number[]): number[] {

const mag = magnitude(vec)

if (mag === 0) {

// Avoid division by zero; return a zero vector if magnitude is 0

return new Array(vec.length).fill(0)

}

// Divide each component by the magnitude

return vec.map((val) => val / mag)

}

/**

* Calculates the cosine similarity between two vectors.

* Measures the cosine of the angle between them, indicating similarity in direction (meaning).

* Result is between -1 (opposite) and 1 (identical), 0 (orthogonal/unrelated).

* @param vecA First vector.

* @param vecB Second vector.

* @returns The cosine similarity score.

*/

function cosineSimilarity(vecA: number[], vecB: number[]): number {

// Normalize both vectors to ensure the result is purely based on direction, not magnitude.

const normalizedA = normalize(vecA)

const normalizedB = normalize(vecB)

// The cosine similarity is the dot product of the normalized vectors.

const similarity = dotProduct(normalizedA, normalizedB)

// Clamp the result to [-1, 1] to handle potential floating-point inaccuracies.

return Math.max(-1, Math.min(1, similarity))

}

/**

* Classifies the input text by calculating its similarity to predefined category descriptions.

* @param text The input text to classify.

* @returns A promise resolving to an object mapping category names to similarity scores.

*/

async function classifyText(text: string): Promise<Record<string, number>> {

// Ensure the API key is available

if (!process.env.OPENAI_API_KEY) {

throw new Error('OPENAI_API_KEY is not set in the environment variables.')

}

// 1. Get the embedding for the input text.

const textEmbedding = await getEmbedding(text)

// Object to store the similarity results

const results: Record<string, number> = {}

// 2. Get embeddings for all category descriptions (in parallel for efficiency).

const categoryPromises = Object.entries(categories).map(

async ([category, description]) => {

const categoryEmbedding = await getEmbedding(description)

// 3. Calculate similarity between input text and each category.

const similarity = cosineSimilarity(textEmbedding, categoryEmbedding)

// Store the result

results[category] = similarity

},

)

await Promise.all(categoryPromises)

// Sort the results by similarity score in descending order.

const sortedResults = Object.fromEntries(

Object.entries(results).sort(([, a], [, b]) => b - a),

)

return sortedResults

}

// Example usage: Demonstrates how to use the classifyText function.

async function main() {

const sampleText =

'The interface is clean and intuitive. Users can navigate through the application ' +

'without having to read documentation. Most tasks can be completed in just a few clicks, ' +

'and the workflow feels natural even for first-time users.'

try {

const results = await classifyText(sampleText)

console.log('Classification results:')

Object.entries(results).forEach(([category, score]) => {

console.log(`${category}: ${score.toFixed(4)}`)

})

const topCategory = Object.keys(results)[0]

console.log(

`\nBest matching category: ${topCategory} with score: ${results[topCategory].toFixed(4)}`,

)

} catch (error) {

console.error(

'Error during classification:',

error instanceof Error ? error.message : error,

)

}

}

// Run the example function

main()Conclusion

Creating vector embeddings provides a powerful way to quantify the semantic meaning of text, enabling tasks like classification, search, and clustering. By generating text embeddings (representing meaning as vectors) and using measures like cosine similarity, we can build sophisticated AI features relatively easily using modern APIs.

This technique moves beyond simple keyword matching to understand the underlying concepts in text.

On that point, I note that Vercel's AI SDK looks nice for this:

FAQ

Q: How do I choose an embedding model?

A: Consider the task (e.g., classification vs. search), performance benchmarks (like the MTEB leaderboard), dimensionality (higher dimensions capture more nuance but require more storage/computation), and cost.

Q: Can I create vector embeddings for languages other than English?

A: Yes, many modern embedding models are multilingual. Check the documentation for the specific model (e.g., OpenAI's text-embedding-3-small supports many languages).

Q: What's the difference between embeddings and fine-tuning an LLM?

A: Embeddings provide a fixed numerical representation of meaning. Fine-tuning adapts an LLM's internal parameters to perform better on a specific task, often requiring more data and computation.

Further reading:

- Here's a great introduction to embeddings - Shows how we capture the meaning of sentences as numbers (vectors).

- Here's a great introduction to cosine similarity - Shows how cosine similarity measures the relationship between word vectors.

- Here's a great article on the potential limitations of cosine similarity and how to minimize them by adding an LLM to standardize your text.